Research Goals

We aim to develop safe and interactive autonomous systems by modeling and predicting human behavior, designing robust decision-making and control frameworks, and validating complex multi-agent systems to verify their safety. Active research topics include modeling human behavior, human-centered robotics, autonomous vehicles, social navigation, and field robotics.

Check out our publication page to learn more about our recent work!

Papers by Topic

Traversing Supervisor Problem: An Approximately Optimal Approach to Multi-Robot Assistance. Ji et al., RSS 2022.

Proactive Anomaly Detection for Robot Navigation with Multi-Sensor Fusion. Ji et al., RA-L 2022.

Examining Audio Communication Mechanisms for Supervising Fleets of Agricultural Robots. Kamboj et al., RO-MAN 2022.

Structural Attention-based Recurrent Variational Autoencoder for Highway Vehicle Anomaly Detection. Chakraborty et al., AAMAS 2023.

An Attentional Recurrent Neural Network for Occlusion-Aware Proactive Anomaly Detection in Field Robot Navigation. Schreiber et al., IROS 2023.

Proactive Anomaly Detection for Robot Navigation with Multi-Sensor Fusion. Ji et al., RA-L 2022.

Multi-Modal Anomaly Detection for Unstructured and Uncertain Environments. Ji et al., CoRL 2020.

PeRP: Personalized Residual Policies For Congestion Mitigation Through Co-operative Advisory Systems. Hasan et al., ITSC 2023.

Combining Planning and Deep Reinforcement Learning in Tactical Decision Making for Autonomous Driving. Hoel et al., Trans. ITS, 2020.

Learning to Navigate Intersections with Unsupervised Driver Trait Inference. Liu et al., ICRA 2022.

Dynamic Environment Prediction in Urban Scenes using Recurrent Representation Learning. Itkina et al., ITSC 2019.

Multi-Agent Variational Occlusion Inference Using People as Sensors. Itkina et al., ICRA 2022.

Online Monitoring for Safe Pedestrian-Vehicle Interactions. Du et al., ITSC 2020.

Finding Diverse Failure Scenarios in Autonomous Systems Using Adaptive Stress Testing. Du et al., SAE 2019.

Robust Model Predictive Control with State Estimation under Set-Membership Uncertainty. Ji et al., CDC 2022.

Improving the Feasibility of Moment-Based Safety Analysis for Stochastic Dynamics. Du et al., TAC 2022.

PeRP: Personalized Residual Policies For Congestion Mitigation Through Co-operative Advisory Systems. Hasan et al., ITSC 2023.

Hierarchical Intention Tracking for Robust Human-Robot Collaboration in Industrial Assembly Tasks. Huang et al., ICRA 2023.

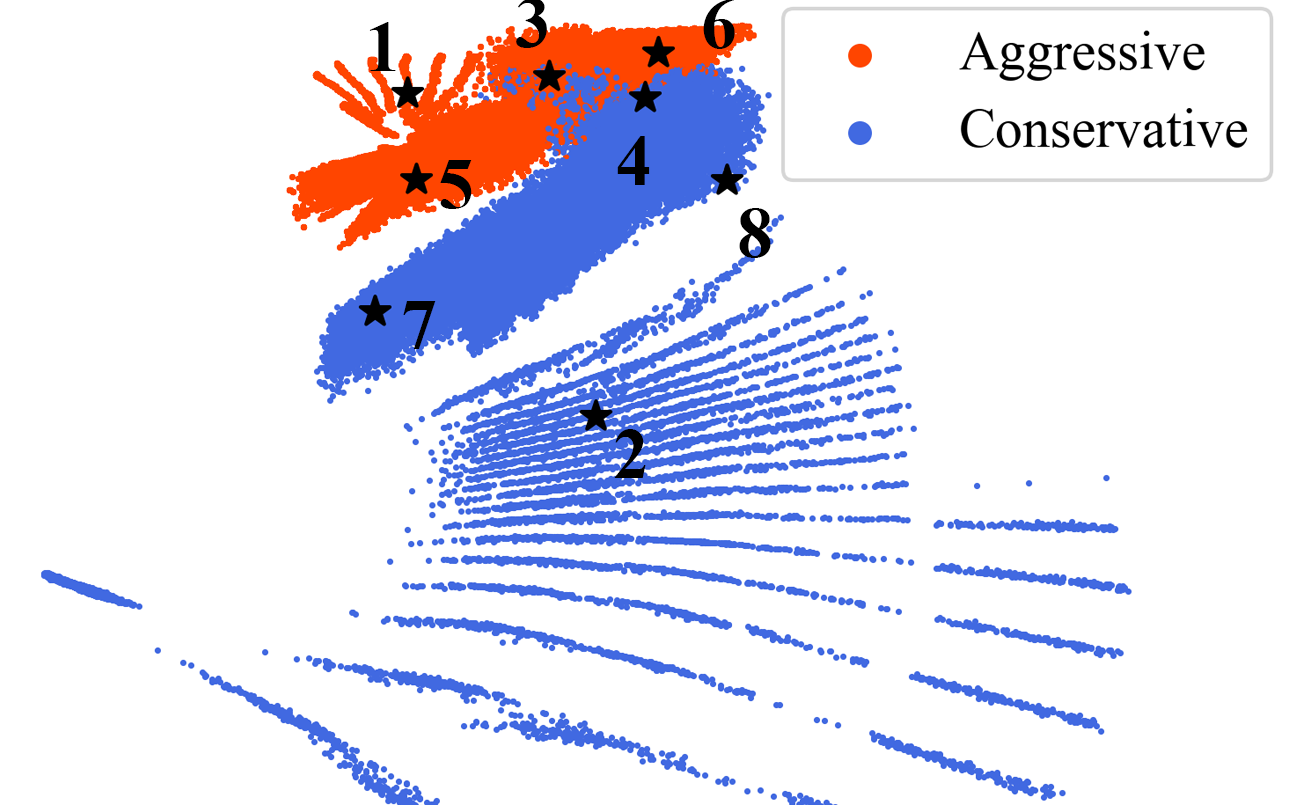

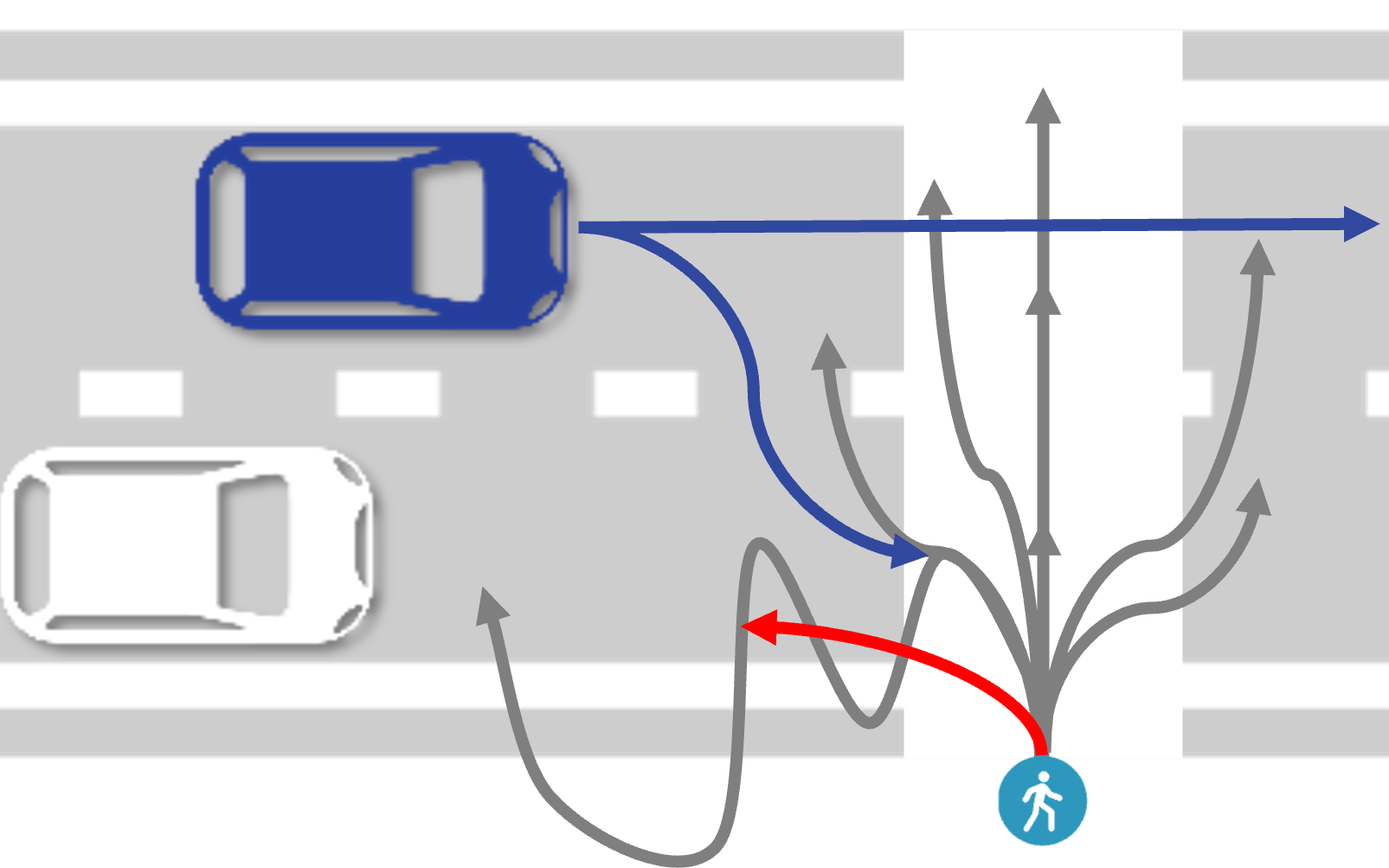

Learning to Navigate Intersections with Unsupervised Driver Trait Inference. Liu et al., ICRA 2022.

Multi-Agent Variational Occlusion Inference Using People as Sensors. Itkina et al., ICRA 2022.

Traversing Supervisor Problem: An Approximately Optimal Approach to Multi-Robot Assistance. Ji et al., RSS 2022.

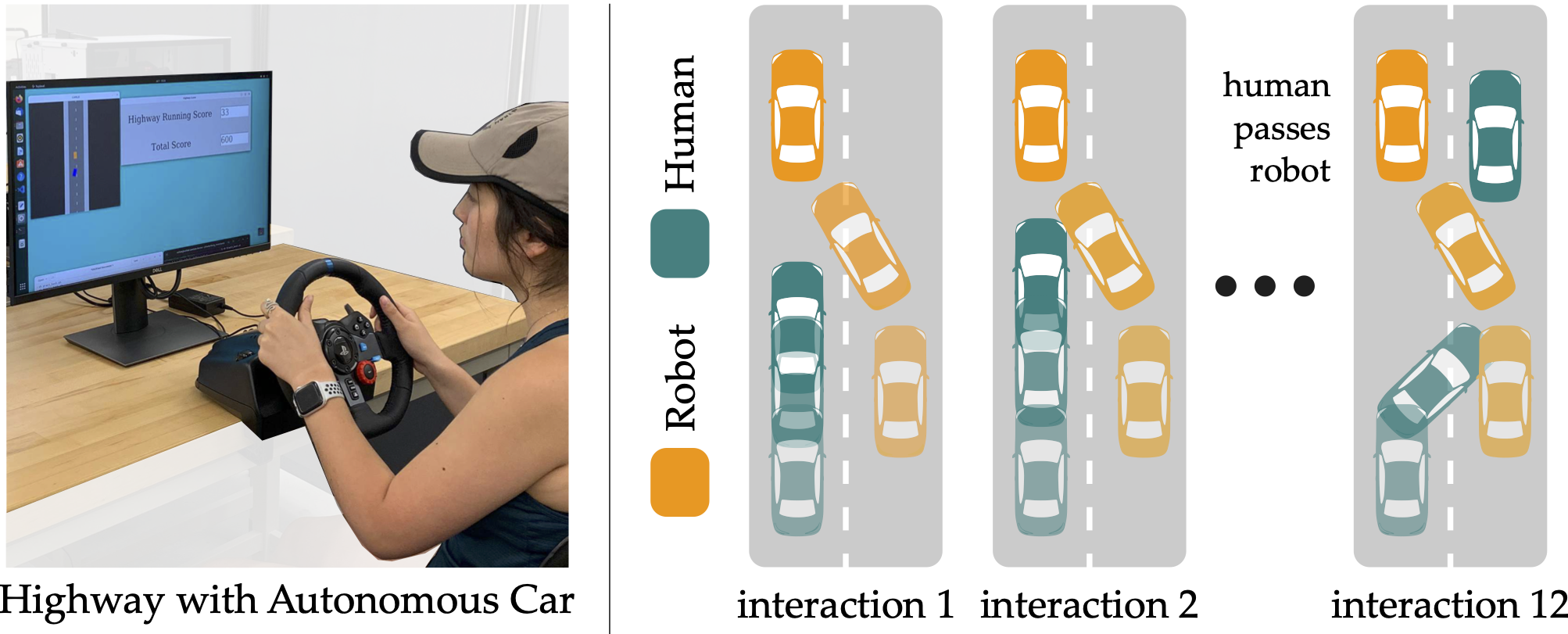

Towards Robots that Influence Humans over Long-Term Interaction. Sagheb et al., ICRA 2023.

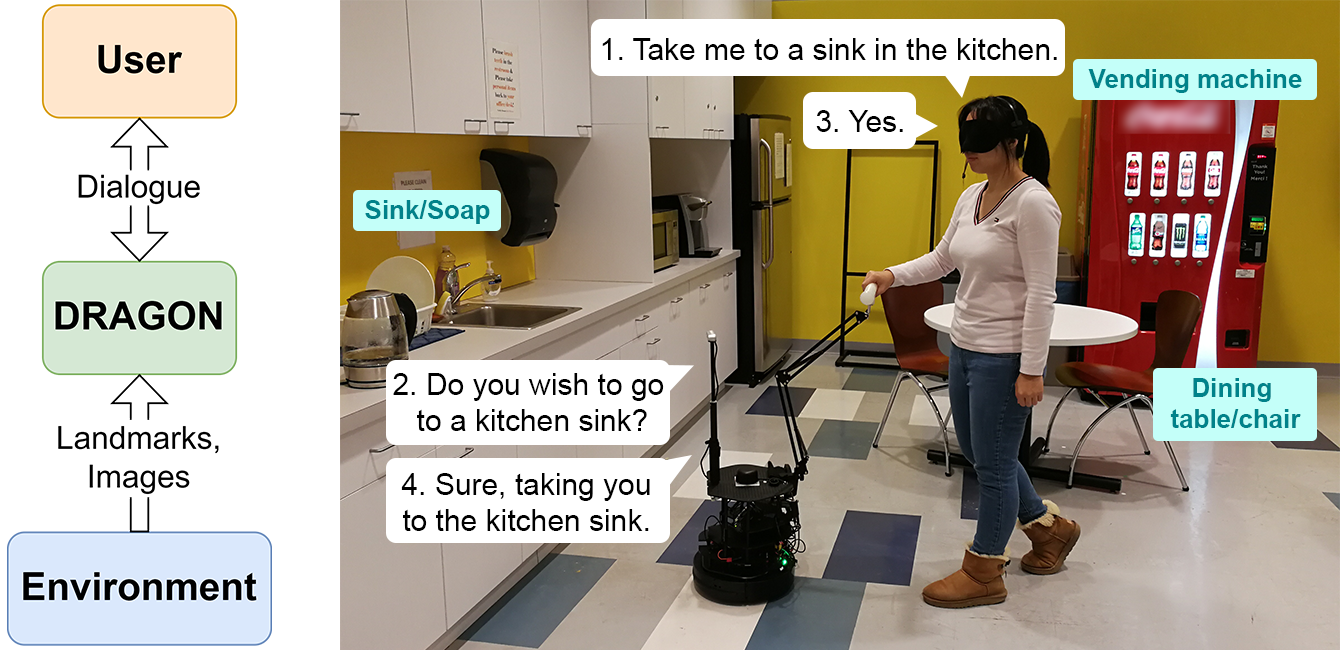

A Dialogue-Based Robot for Assistive Navigation with Visual Language Grounding. Liu et al., RA-L 2024.

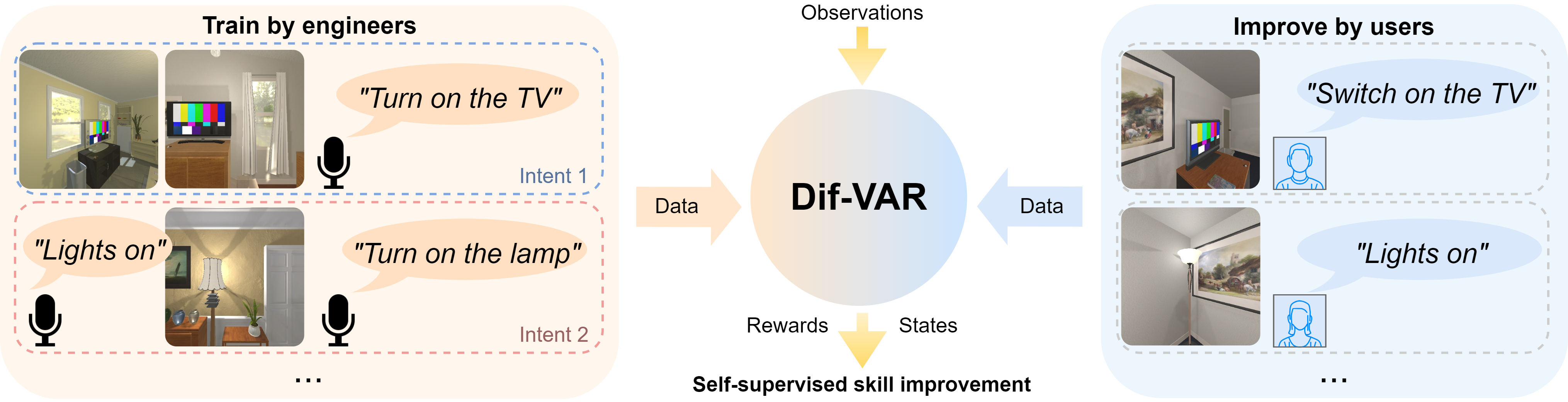

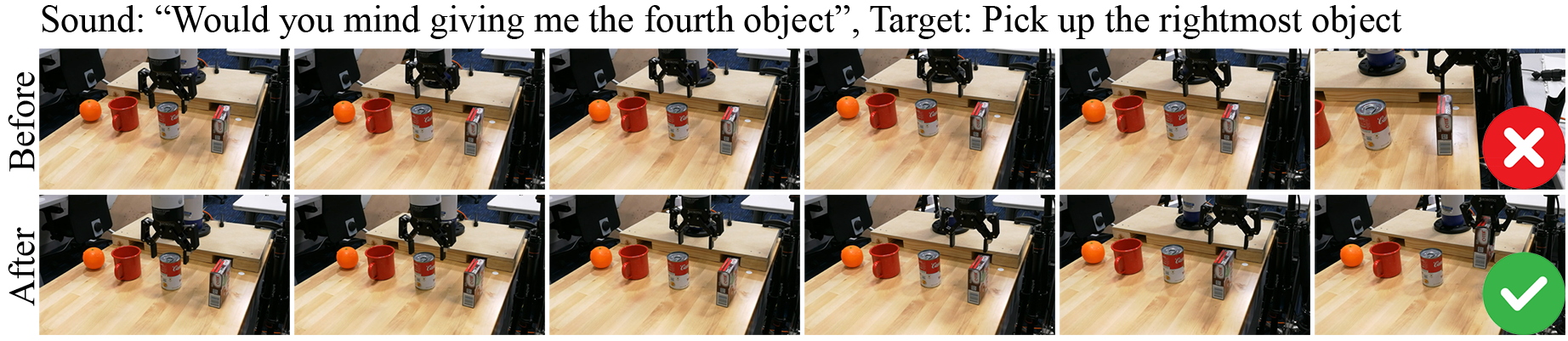

A Data-Efficient Visual-Audio Representation with Intuitive Fine-tuning for Voice-Controlled Robots. Chang et al., CoRL 2023.

Learning Visual-Audio Representations for Voice-Controlled Robots. Chang et al., ICRA 2023.

Robot Sound Interpretation: Combining Sight and Sound in Learning-Based Control. Chang et al., IROS 2020.

Predicting Object Interactions with Behavior Primitives: An Application in Stowing Tasks. Chen et al., CoRL 2023.

Combining Model-Based Controllers and Generative Adversarial Imitation Learning for Traffic Simulation. Chen et al., ITSC 2022.

EnsembleDAgger: A Bayesian Approach to Safe Imitation Learning. Menda et al., IROS 2019.

HG-DAgger: Interactive Imitation Learning with Human Experts. Kelly et al., ICRA 2019.

Simulating Emergent Properties of Human Driving Behavior Using Multi-Agent Reward Augmented Imitation Learning. Bhattacharyya et al., ICRA 2019.

Occlusion-Aware Crowd Navigation Using People as Sensors. Mun et al., ICRA 2023.

Multi-Agent Variational Occlusion Inference Using People as Sensors. Itkina et al., ICRA 2022.

An Attentional Recurrent Neural Network for Occlusion-Aware Proactive Anomaly Detection in Field Robot Navigation. Schreiber et al., IROS 2023.

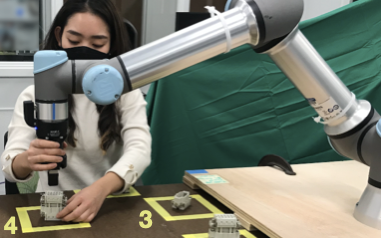

Hierarchical Intention Tracking for Robust Human-Robot Collaboration in Industrial Assembly Tasks. Huang et al., ICRA 2023.

Predicting Object Interactions with Behavior Primitives: An Application in Stowing Tasks. Chen et al., CoRL 2023.

Neural Informed RRT* with Point-based Network Guidance for Optimal Sampling-based Path Planning. Huang et al., ICRA 2024.

Traversing Supervisor Problem: An Approximately Optimal Approach to Multi-Robot Assistance. Ji et al., RSS 2022.

Combining Planning and Deep Reinforcement Learning in Tactical Decision Making for Autonomous Driving. Hoel et al., Trans. ITS, 2020.

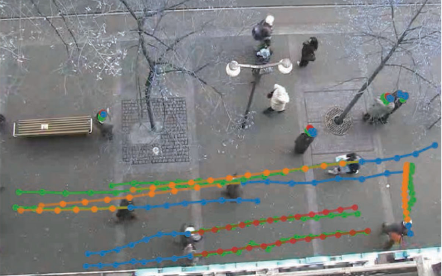

Meta-path Analysis on Spatio-Temporal Graphs for Pedestrian Trajectory Prediction. Hasan et al., ICRA 2022.

Learning Sparse Interaction Graphs of Partially Detected Pedestrians for Trajectory Prediction. Huang et al., RA-L 2021.

Long-term Pedestrian Trajectory Prediction using Mutable Intention Filter and Warp LSTM. Huang et al., RA-L 2020.

Dynamic Environment Prediction in Urban Scenes using Recurrent Representation Learning. Itkina et al., ITSC 2019.

PeRP: Personalized Residual Policies For Congestion Mitigation Through Co-operative Advisory Systems. Hasan et al., ITSC 2023.

A Data-Efficient Visual-Audio Representation with Intuitive Fine-tuning for Voice-Controlled Robots. Chang et al., CoRL 2023.

Learning Visual-Audio Representations for Voice-Controlled Robots. Chang et al., ICRA 2023.

Robot Sound Interpretation: Combining Sight and Sound in Learning-Based Control. Chang et al., IROS 2020.

Decentralized Structural-RNN for Robot Crowd Navigation with Deep Reinforcement Learning. Liu et al., ICRA 2021.

Combining Planning and Deep Reinforcement Learning in Tactical Decision Making for Autonomous Driving. Hoel et al., Trans. ITS, 2020.

Efficient Equivariant Transfer Learning from Pretrained Models. Basu & Katdare et al., NeurIPS 2023.

Monte Carlo Tree Search for Policy Optimization. Ma et al., IJCAI 2019.

Marginalized Importance Sampling for Off-Environment Policy Evaluation. Katdare et al., CoRL 2023.

Off Environment Evaluation Using Convex Risk Minimization. Katdare et al., ICRA 2022.

Adaptive Failure Search Using Critical States from Domain Experts. Du et al., ICRA 2021.

Adaptive Stress Testing with Reward Augmentation for Autonomous Vehicle Validation. Corso & Du et al., ITSC 2019.

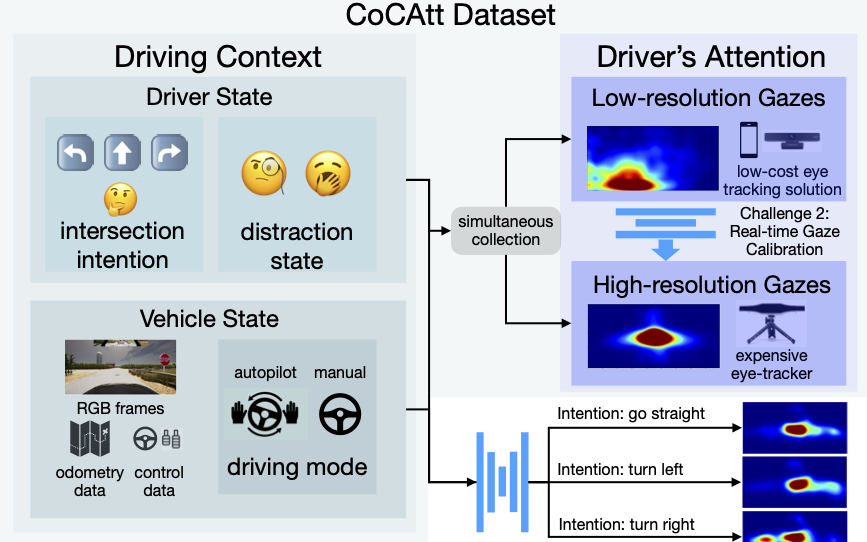

CoCAtt: A Cognitive-Conditioned Driver Attention Dataset. Shen et al., ITSC 2022.

An Interdisciplinary Approach: Potential for Robotic Support to Address Wayfinding Barriers Among Persons with Visual Impairments. Bayles et al., HFES 2022.

Examining Audio Communication Mechanisms for Supervising Fleets of Agricultural Robots. Kamboj et al., RO-MAN 2022.

Modeling Human Behavior

To better understand human behavior, we model different aspects of human decision-making and future motion, which in turn inform how our robots should respond and interact.

Intent Inference

Human intent can be interpreted as the goals that guide decision-making and motion. We use probabilistic graphs to examine the hierarchy of high- and low-level goals and the relationships between them, and we demonstrate how this can improve collaboration in terms of safety and joint task performance.
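As a rough illustration of the probabilistic reasoning involved, the sketch below performs a single-level Bayesian update over a discrete set of candidate goals. It is not the hierarchical model from our work: the goal list, the Boltzmann-style likelihood, and the rationality parameter `beta` are all assumptions made for this example. A hierarchical version would place a further distribution over high-level goals above these low-level ones.

```python
import math


def update_intent_posterior(prior, position, velocity, goals, beta=2.0):
    """One Bayesian filtering step over discrete candidate goals.

    Assumed (hypothetical) likelihood: a person heading to goal g moves in a
    direction close to the direction of g, so
    p(velocity | g) is proportional to exp(beta * cos(angle to g)).
    """
    speed = math.hypot(velocity[0], velocity[1])
    unnormalized = []
    for p_goal, (gx, gy) in zip(prior, goals):
        dx, dy = gx - position[0], gy - position[1]
        dist = math.hypot(dx, dy)
        if speed == 0.0 or dist == 0.0:
            cos_sim = 0.0  # no directional evidence
        else:
            cos_sim = (dx * velocity[0] + dy * velocity[1]) / (dist * speed)
        unnormalized.append(p_goal * math.exp(beta * cos_sim))
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Two hypothetical goals: a workstation ahead and a shelf to the left.
goals = [(10.0, 0.0), (0.0, 10.0)]
belief = [0.5, 0.5]
for _ in range(3):  # three observations of motion along +x
    belief = update_intent_posterior(belief, (0.0, 0.0), (1.0, 0.0), goals)
print(belief)  # most of the probability mass moves to the first goal
```

Each observation multiplies the belief by a likelihood and renormalizes, so consistent evidence accumulates over time while a change in direction can shift the belief back.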

Trait Estimation

People’s internal processes and traits determine how they perform different actions. We consider different representations of hidden traits (e.g., discrete labels such as aggressive or conservative, or continuous parameters) and their downstream impact on advisory systems and interactive navigation.

Motion Prediction

Predicting future trajectories is crucial for safe navigation in human-dominated environments. We use insights from intent and trait estimation to better predict the future motion of human agents, and we explicitly consider common real-world issues such as partial observability and selective influence.

Human-Centered Robotics

We consider different facets of designing robots for humans: how they can be used intuitively, the impacts of long-term interaction, and how robots can learn from humans.

Influence in Repeated Interactions

Whenever robots work near humans, their actions inevitably affect human behavior. Today’s algorithms focus on influencing humans in the short term: although these methods change the human’s immediate behavior, over time humans adapt to the robot in unexpected ways. To beneficially influence humans across lifelong interaction, robot controllers must continually co-adapt.

Voice-Controlled Robots

Natural language is an intuitive way to interact with and command robots. However, to be used effectively by non-experts, these robots must be continually improved with minimal help from their end users rather than from engineers. We explore novel representations that connect spoken commands directly to goals, so that robots can both complete known tasks and adapt to new environments.

Learning from Demonstrations

Learning from humans allows robots to behave in natural and intuitive ways, provided we can design algorithms that elicit meaningful demonstrations in a sample-efficient manner. We develop algorithmic approaches that consider how the human can provide better and more informative data, as well as how we can impose structure on human motion or on interactions with the environment.
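HG-DAgger (Kelly et al., ICRA 2019, listed above) is one example of interactive imitation learning in which a human expert decides when to take control. The toy loop below sketches that gating idea on a one-dimensional system. The dynamics, the expert, the linear novice policy, and the `gate` threshold are hypothetical choices for illustration, not the published implementation.

```python
import random


def expert_action(x):
    # Hypothetical expert: push the state halfway back toward 0.
    return -0.5 * x


def fit_linear_policy(data):
    # Least-squares fit of a = k * x (no intercept) on aggregated (x, a) pairs.
    num = sum(x * a for x, a in data)
    den = sum(x * x for x, _ in data)
    return num / den if den > 0 else 0.0


def hg_dagger(n_iters=5, horizon=50, gate=1.0, seed=0):
    rng = random.Random(seed)
    k = 0.0    # novice gain; initially the novice does nothing
    data = []  # aggregated expert labels across all iterations
    for _ in range(n_iters):
        x = rng.uniform(-3.0, 3.0)
        for _ in range(horizon):
            if abs(x) > gate:
                # Human gate: the expert takes over outside the "safe" region
                # and its action is recorded as a training label.
                a = expert_action(x)
                data.append((x, a))
            else:
                a = k * x  # the novice acts; nothing is recorded
            x = x + a + rng.gauss(0.0, 0.1)  # noisy 1-D dynamics
        if data:
            k = fit_linear_policy(data)  # retrain on the aggregated dataset
    return k, len(data)


k, n_labels = hg_dagger()
print(k, n_labels)
```

Because expert labels are recorded only when the human intervenes, the aggregated dataset concentrates on the states where the novice needs help, which is the purpose of the gating.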

Autonomous Vehicles

We focus on two major challenges facing autonomous vehicles: How do we understand and interact with human agents? How do we assess how safe the overall system is before deployment?

Safety Validation with AST

Determining possible failure scenarios is a critical step in the evaluation of autonomous vehicles. We have developed a tool called Adaptive Stress Testing (AST), which formulates the problem of finding the most likely failure scenarios as a Markov decision process that can be solved with reinforcement learning, allowing large simulation spaces to be searched efficiently.
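To make the MDP formulation concrete, the sketch below scores disturbance sequences with a reward in the spirit of the AST literature: each step earns the log-likelihood of the sampled disturbance, and an episode that ends without a failure pays a large penalty that grows with the miss distance. Everything here is an assumption for illustration: the toy car-and-pedestrian simulator, the Gaussian sensor noise, the penalty constants `alpha` and `beta`, and the use of plain Monte Carlo sampling in place of the reinforcement learning solver described above.

```python
import math
import random

SIGMA = 1.0  # std. dev. of the Gaussian sensor-noise disturbance (assumed)


def log_likelihood(a):
    """Log-density of a disturbance a under N(0, SIGMA^2)."""
    return -0.5 * (a / SIGMA) ** 2 - math.log(SIGMA * math.sqrt(2.0 * math.pi))


def rollout(disturbances, gap0=20.0, speed=2.0, decel=0.5, brake_dist=5.0):
    """Toy system under test: a car approaching a stationary pedestrian.

    The car starts braking once its noisy distance reading drops below
    brake_dist. Returns (failed, min_gap, steps_used)."""
    gap, v, braking = gap0, speed, False
    min_gap = gap
    for t, a in enumerate(disturbances):
        if not braking and gap + a < brake_dist:  # disturbance corrupts the reading
            braking = True
        if braking:
            v = max(v - decel, 0.0)
        gap -= v
        min_gap = min(min_gap, gap)
        if gap <= 0.0:  # collision: the failure event
            return True, min_gap, t + 1
    return False, min_gap, len(disturbances)


def ast_return(disturbances, alpha=1e4, beta=1e3):
    """Sum of disturbance log-likelihoods up to termination, minus a large
    miss-distance penalty if the episode ends without a failure."""
    failed, min_gap, steps = rollout(disturbances)
    total = sum(log_likelihood(a) for a in disturbances[:steps])
    if not failed:
        total -= alpha + beta * min_gap
    return total, failed


def random_search(n_samples=2000, horizon=15, seed=0):
    """Monte Carlo stand-in for the RL solver: keep the highest-return sequence."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_samples):
        seq = [rng.gauss(0.0, SIGMA) for _ in range(horizon)]
        ret, failed = ast_return(seq)
        if best is None or ret > best[0]:
            best = (ret, failed, seq)
    return best


best_return, best_failed, best_seq = random_search()
print(best_failed, round(best_return, 2))
```

Any failing sequence outscores every non-failing one because of the penalty, and among failures the return favors the most likely disturbances, so the search approximates the most likely failure it can find. A reinforcement learning or tree-search solver optimizes the same objective far more efficiently than random sampling.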

Planning for Interactive Autonomy

Planning and decision-making for autonomous driving is challenging due to the diversity of environments and the complex interactions with other road users. We explore how to combine insights from traditional planning with state-of-the-art learning to improve overall performance.
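One common way to combine tree search with learning, popularized by AlphaGo Zero, is to let a neural network supply action priors and value estimates that guide Monte Carlo tree search. The selection rule at the heart of that combination is sketched below. The function name, the constant `c_puct`, the action labels, and the example numbers are illustrative assumptions, not settings taken from the papers listed above.

```python
import math


def puct_select(q, n, prior, c_puct=1.0):
    """Return the index of the action maximizing the PUCT score
    Q(s, a) + c_puct * P(s, a) * sqrt(N(s)) / (1 + N(s, a)).

    q: mean value estimate per action (from search backups)
    n: visit count per action
    prior: network policy prior per action (sums to 1)
    """
    total_visits = sum(n)
    scores = [
        qa + c_puct * pa * math.sqrt(total_visits) / (1 + na)
        for qa, na, pa in zip(q, n, prior)
    ]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical tactical actions at one highway-driving search node.
actions = ["keep lane", "change left", "change right"]
choice = puct_select(q=[0.5, 0.4, 0.1], n=[10, 2, 1], prior=[0.2, 0.5, 0.3])
print(actions[choice])  # -> change left
```

Here a rarely visited action with a strong prior wins over the action with the highest current value estimate, which is how the learned prior focuses exploration; as visit counts grow, the Q term dominates and the search relies more on its own backed-up values.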

Modeling Driver Behaviors

In addition to general human modeling, we consider driver-specific factors, such as the impact of communication and the role of automation in driver attention. This work relies heavily on human studies.

Social Navigation

We consider how to best capture interactions between agents to improve decision-making and planning in complex environments. We also look at interesting use-cases, like assistive guidance for people with visual impairments.

Crowd Navigation

We study the problem of safe and interaction-aware robot navigation. We examine new ways to encode interaction, specifically using graph representations to capture interaction and influence between different agents. We also look at how to incorporate insights from motion prediction algorithms to explicitly encourage foresight and to reason about occlusions. Using reinforcement learning frameworks, we achieve a balance between safety, efficiency, and interaction, and we have successfully transferred our policies to the real world.
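The graph encoding can be illustrated with a small sketch that connects the robot to nearby humans (robot-human edges) and nearby humans to each other (human-human edges), then weights the robot-human edges. The sensing radius is an assumption, and the distance-based softmax is a fixed stand-in for the learned attention a trained network would produce; it is not the architecture used in the papers listed above.

```python
import math


def build_interaction_graph(robot, humans, radius=5.0):
    """Return robot-human edges (human indices) and human-human edges (index
    pairs) between agents within `radius` of each other (units assumed meters)."""
    rh_edges = [i for i, h in enumerate(humans) if math.dist(robot, h) <= radius]
    hh_edges = [
        (i, j)
        for i in range(len(humans))
        for j in range(i + 1, len(humans))
        if math.dist(humans[i], humans[j]) <= radius
    ]
    return rh_edges, hh_edges


def edge_weights(robot, humans, rh_edges, temperature=1.0):
    """Softmax over negative distances: closer humans receive more weight.
    A hand-designed stand-in for learned attention scores."""
    if not rh_edges:
        return {}
    logits = [-math.dist(robot, humans[i]) / temperature for i in rh_edges]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {i: e / total for i, e in zip(rh_edges, exps)}


robot = (0.0, 0.0)
humans = [(1.0, 0.0), (3.0, 0.0), (10.0, 0.0)]
rh, hh = build_interaction_graph(robot, humans)
print(rh, hh)  # -> [0, 1] [(0, 1)]
print(edge_weights(robot, humans, rh))
```

In a learned policy, the edge features would be passed through neural networks and the weights learned end to end; the sketch only shows how the graph structure restricts which agents can influence one another.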

Wayfinding Assistant: WayBot

We are developing WayBot, a mobile robot that can provide guidance as well as information about the environment to persons with visual impairments (PwVI). Motivated by recent advances in visual-language grounding and semantic navigation, we extend common navigation approaches to include a dialogue system that can both understand open-vocabulary commands and answer questions based on visual observations. We collaborate with domain experts and use participatory design strategies to design and validate WayBot.

Field Robotics

We look at how to increase the level of autonomy of robots that operate in unstructured environments, taking special care to consider how users can easily interact with these robots in practice.

Agricultural Robotics

We are developing approaches to increase the autonomous capabilities of agricultural robots (agbots), specifically looking at how they can recover from failures. We have developed proxies for failure detection through anomaly detection frameworks and identified new problems in supervisory control and fleet management.
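A common way to turn a learned predictor into a failure proxy is to compare its predictions with what the sensors actually report and to flag large errors. The sketch below shows only that thresholding step for a single scalar signal. The `k`-sigma rule and the example numbers are assumptions, and the learned multi-sensor predictor from the papers listed above is replaced here by given prediction values.

```python
import statistics


def fit_threshold(nominal_errors, k=3.0):
    """Threshold = mean + k * std of prediction errors seen in nominal runs."""
    mu = statistics.fmean(nominal_errors)
    sigma = statistics.pstdev(nominal_errors)
    return mu + k * sigma


def detect_anomalies(predicted, observed, threshold):
    """Flag each time step whose absolute prediction error exceeds the threshold."""
    return [abs(p - o) > threshold for p, o in zip(predicted, observed)]


# Hypothetical prediction errors collected while the robot drove normally.
threshold = fit_threshold([0.10, 0.20, 0.15, 0.12, 0.18])
flags = detect_anomalies(
    predicted=[1.0, 1.0, 1.0], observed=[1.1, 2.0, 0.95], threshold=threshold
)
print(round(threshold, 3), flags)  # -> 0.261 [False, True, False]
```

Calibrating the threshold only on nominal data means no failure examples are needed in advance, which is what makes anomaly detection usable as a proxy for failures that have not yet been observed.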

Adaptive Traversability

Traversability estimation identifies where in the environment a robot can travel. To make this task easy to deploy in new environments, we focus on methods that easily gather labels from users. We have developed a weakly supervised method in which a non-expert annotates the relative traversability of a small number of point pairs, significantly reducing labeling effort.
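Pairwise annotations like these can be turned into a traversability score with a standard ranking loss. The sketch below fits a linear score with a Bradley-Terry (logistic pairwise) loss by gradient descent. The two terrain features (slope and roughness), the linear model, and the training settings are hypothetical, and the method in our work may use a different model and loss.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_pairwise(features, pairs, lr=0.5, epochs=200):
    """Fit w so that score(x) = w . x ranks the annotated points.

    features: one feature vector per annotated point.
    pairs: (i, j) means point i was labeled MORE traversable than point j.
    Minimizes the mean of -log(sigmoid(w . (x_i - x_j))) over all pairs.
    """
    dim = len(features[0])
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for i, j in pairs:
            diff = [a - b for a, b in zip(features[i], features[j])]
            margin = sum(wk * dk for wk, dk in zip(w, diff))
            coef = sigmoid(margin) - 1.0  # derivative of -log(sigmoid(margin))
            for k in range(dim):
                grad[k] += coef * diff[k]
        w = [wk - lr * gk / len(pairs) for wk, gk in zip(w, grad)]
    return w


def score(w, x):
    """Higher score means more traversable under the learned model."""
    return sum(wk * xk for wk, xk in zip(w, x))


# Hypothetical [slope, roughness] features for three annotated points.
points = [[0.0, 0.1], [0.3, 0.2], [0.6, 0.8]]
labels = [(0, 1), (1, 2), (0, 2)]  # a few relative judgments from a non-expert
w = train_pairwise(points, labels)
print([round(score(w, p), 2) for p in points])  # scores decrease from first to last
```

Only relative judgments are needed, so the annotator never has to assign an absolute traversability value; the learned score can then be evaluated on unlabeled points.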